Reflections on Midjourney

Last Thursday I paid $30 for a subscription to Midjourney, the AI image generation tool built by a team of roughly 11 people. In return, I got access to unfettered AI image generation. Founded by David Holz, former cofounder of Leap Motion, Midjourney is HUGE and getting bigger by the day. They have tens of thousands of GPUs running, and their Discord server has around 13.3 million members. Six months ago, that number was just 2 million. And they've done it all without any venture funding.

I’d played with Stable Diffusion, another image generation model, before. I’d loaded it onto an old gaming laptop with a GPU and typed prompts into the command line. It was super slow on that GPU, and I remember queuing up 50 prompts and letting it run overnight. The next morning was like Christmas, finding all the crazy stuff it had generated.

Midjourney, on the other hand, is used entirely through Discord right now. In any server that has Midjourney in it, you simply enter /imagine and then type a “prompt” describing what you’d like to see. For example, Steve Jobs dunking a basketball into the net:

Ok, so the net is kinda…wonky, but it’s got the right idea. Look at that photorealism!

It’s addictive, fun and scary. It feels like magic. I honestly haven’t had this much fun with computers in a while. It feels like your first gaming system or cell phone. Completely new and exciting. Unreal.

How does an AI generate images?

Caution: I’m not an AI expert and this is probably full of inaccuracies. DYOR.

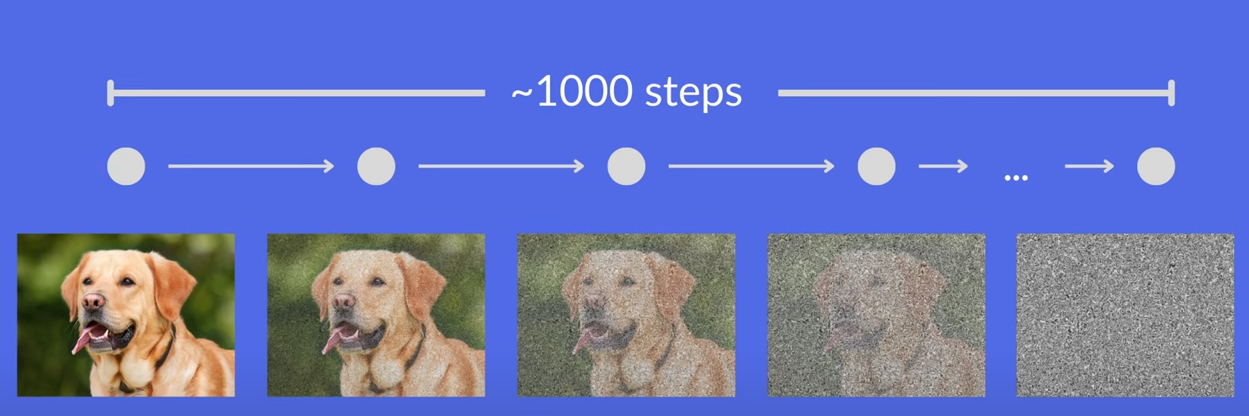

Midjourney and other AI image generation models work by diffusion. During training, they take images and add noise to them step by step until they are basically all just noise, and the model learns to predict the noise that was added at each step.
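Here is a minimal Python sketch that makes the “add noise until it’s all noise” idea concrete. It assumes the standard DDPM-style formulation; it is not anything Midjourney has published about its own model. The linear noise schedule, the 1,000 steps, the function names (`linear_beta_schedule`, `alpha_bars`, `add_noise`) and the 4-pixel “image” are all illustrative assumptions.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise amounts (beta_t). A linear schedule is an assumption here."""
    if num_steps < 2:
        raise ValueError("need at least two steps")
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def alpha_bars(betas):
    """alpha_bar_t: running product of (1 - beta_t).
    Its square root scales the original image at step t."""
    result = []
    prod = 1.0
    for beta in betas:
        prod *= 1.0 - beta
        result.append(prod)
    return result


def add_noise(image, t, abars, rng):
    """Jump straight to step t: x_t = sqrt(abar_t) * x_0 + sqrt(1 - abar_t) * noise."""
    signal_scale = math.sqrt(abars[t])
    noise_scale = math.sqrt(1.0 - abars[t])
    return [signal_scale * px + noise_scale * rng.gauss(0.0, 1.0) for px in image]


if __name__ == "__main__":
    rng = random.Random(0)
    image = [0.8, -0.5, 0.1, 0.9]  # hypothetical 4-pixel "image", scaled to [-1, 1]
    abars = alpha_bars(linear_beta_schedule(1000))
    for t in (0, 250, 999):
        noisy = add_noise(image, t, abars, rng)
        print(f"step {t}: alpha_bar={abars[t]:.4f}, pixels={[round(p, 2) for p in noisy]}")
```

At step 0 the pixels are almost untouched. By the last step, alpha_bar is close to zero, so almost nothing of the original image survives. That is the “basically all just noise” endpoint.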

This is done on millions of images scraped from the web, which together form the training set for a machine learning model (there’s a whole other conversation to be had here about copyright and intellectual property). The trained model is much smaller than the corpus of images it learned from.

This video by Computerphile is also worth a watch for understanding diffusion models. I need to give it a rewatch myself.

Generating an image then runs the process in reverse. It starts from random noise and, one step at a time, uses the model to predict and remove some of that noise, guided by the prompt, until an image slowly emerges.
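The reverse direction can be sketched the same way, with the same hedges. This is a DDPM-style sampling loop that starts from random noise and removes a little predicted noise at each step. The big assumption is that the trained neural network is replaced by a hypothetical “oracle” (`predict_noise`). The oracle looks up a made-up target image for the prompt in a hypothetical `PROMPT_TO_IMAGE` table. A real model has to learn that prediction from its training data and the prompt text, so this only shows the mechanics of the loop, not how Midjourney actually works.

```python
import math
import random

# Hypothetical stand-in for what a trained model has learned: each prompt maps
# to one made-up 4-pixel "image". A real model learns this from millions of images.
PROMPT_TO_IMAGE = {
    "sunny sky": [0.9, 0.8, 0.9, 0.7],
    "night sky": [-0.9, -0.8, -0.7, -0.9],
}


def make_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Linear beta schedule (an assumption) plus alpha_t and cumulative alpha_bar_t."""
    if num_steps < 2:
        raise ValueError("need at least two steps")
    step = (beta_end - beta_start) / (num_steps - 1)
    betas = [beta_start + i * step for i in range(num_steps)]
    alphas = [1.0 - b for b in betas]
    abars, prod = [], 1.0
    for a in alphas:
        prod *= a
        abars.append(prod)
    return betas, alphas, abars


def predict_noise(x_t, t, prompt, abars):
    """Hypothetical oracle: returns the exact noise in x_t.
    A real system runs a neural network here, conditioned on the prompt."""
    target = PROMPT_TO_IMAGE[prompt]
    return [
        (xt - math.sqrt(abars[t]) * px) / math.sqrt(1.0 - abars[t])
        for xt, px in zip(x_t, target)
    ]


def generate(prompt, num_steps=1000, seed=0):
    """DDPM-style ancestral sampling: start from noise, denoise one step at a time."""
    rng = random.Random(seed)
    betas, alphas, abars = make_schedule(num_steps)
    x = [rng.gauss(0.0, 1.0) for _ in PROMPT_TO_IMAGE[prompt]]  # pure random noise
    for t in reversed(range(num_steps)):
        eps = predict_noise(x, t, prompt, abars)
        coef = betas[t] / math.sqrt(1.0 - abars[t])
        # Remove this step's share of the predicted noise.
        mean = [(xi - coef * ei) / math.sqrt(alphas[t]) for xi, ei in zip(x, eps)]
        if t > 0:
            # Add a little fresh noise back (here sigma_t^2 = beta_t, one common choice).
            sigma = math.sqrt(betas[t])
            x = [m + sigma * rng.gauss(0.0, 1.0) for m in mean]
        else:
            x = mean
    return x


if __name__ == "__main__":
    print([round(p, 3) for p in generate("night sky")])
```

Because the oracle already knows the answer, the loop lands on the target pixels no matter which random noise it starts from. A real network only approximates this prediction. Different starting noise then leads it to different images, which is why the same prompt gives you different results each time.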

This is not a great explanation, I know, but it’s the best I can do right now. Maybe I’ll dive deeper later and write another post about it once I have a good handle on it.

Back to the cool stuff Midjourney can create and some of its implications.

My Favorite Images

Since last Thursday, I’ve generated more than 1,500 AI images, playing with prompts and adding camera phrases about focal lengths and lens types. I don’t know anything about cameras or lenses, but it’s quite fun to switch words around and see how they influence the result.

Below are some of my favorite images I’ve made with Midjourney:

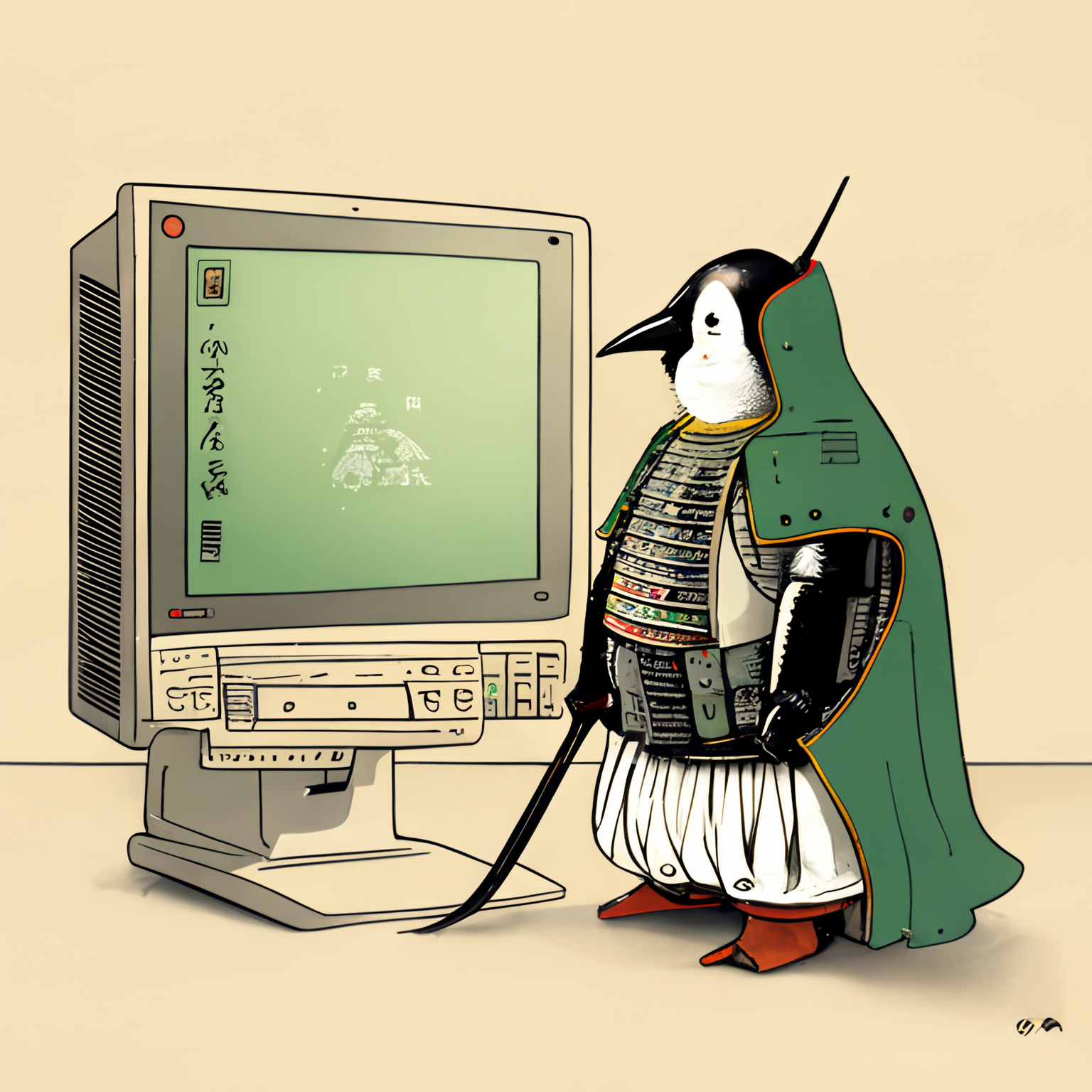

I used the image below as the cover for Shell Samurai, an e-book I recently wrote.

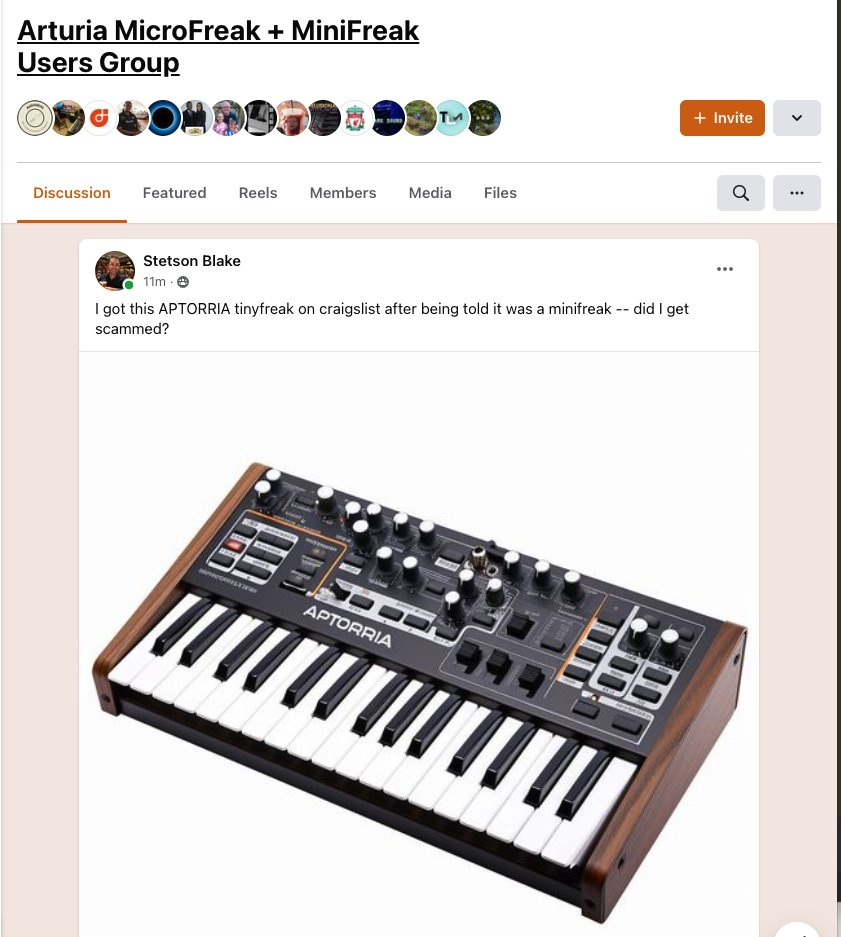

I like to play with synthesizers and various devices that make noise. One of them is the Arturia Microfreak. I decided to plug the names of these devices into Midjourney prompts and see what it’d spit out. Midjourney then generates an image of a device that’s never existed…although it carries remnants of real ones.

I’m a bit of a troll, so I enjoy posting the images to Facebook groups dedicated to that hardware and telling people I got it on Craigslist. Lots of people know about AI image generation, of course, so they usually pick up on the joke.

Most of the above images were generated with Midjourney v4. Just 2 days ago, Midjourney v5 was released and blew everyone away. With v4, you could put in a short phrase and whatever you got back was really random. The results weren’t just photos, but drawings, cartoons, weird colors, etc.

Midjourney v5 is much more opinionated and by default seems to generate “real photos” that look like they were taken with a camera. I haven’t quite gotten the hang of prompting with it yet because it requires you to be super specific.

Here are some insane v5 images I’ve generated:

I’m not really sure what the implications of AI imagery are. It’s exciting and scary. I have no doubt it’s going to impact every single industry. What happens to stock photo websites that want to charge $250 for a photo of people curling when I can just generate my own?

What about deepfakes? You won’t be able to trust that any image you see online is authentic. Will there be an “image signature” of some sort that we can use to verify authenticity? It seems like a big problem. What responsibility will news sites have to take, and what will they have to disclose, when using images?

Is AI going to take our jobs away?

I haven’t even covered ChatGPT here. ChatGPT is built on an LLM, or Large Language Model. LLMs are trained on huge amounts of text and interact with users in a conversational manner. They work really well, but they can state inaccurate things with confidence, so caution must be used.

I’ve used ChatGPT to learn programming languages and to spit out code that mostly works but needs some modification. You can give ChatGPT a prompt like “write me some Ruby code that will use the watir library to load a browser, take an input of domains, visit each website and scrape any emails it finds” and it’ll do it. It’s remarkable.

I don’t think software development jobs are at huge risk from AI in the near term. You still have to understand concepts at a lower level and be able to ask the LLM for what you need. You need to translate business needs into the concrete things that need to be done.

I DO think that LLMs will become a standard tool in the belt of knowledge workers everywhere, just like a web browser, text editor or spreadsheet program. I think that learning to use these tools will be a superpower at first, and then commonplace. They increase the bandwidth for learning. For example, I can’t do complicated things in Google Sheets easily. Before, I’d Google it and piece the knowledge together myself; now I can give my problem to an LLM and have it explain what to do.

I saw a comment on Hacker News recently (because of course I did) saying that LLMs are like having a PhD on call at all hours whom you can ask anything. Even if the LLM is wrong 20% of the time, maybe the tradeoff is worth it because you have constant access to it. A PhD might be wrong 5-10% of the time, but their time is sacred and limited.

I don’t want to hand my thinking over to a computer completely. I think doing that could make you more ignorant in some ways. Have you ever used Google Maps so much that you never really learn your way around? Sometimes it’s good to turn off the computer and get lost.

Farnam Street had an excellent blog post about this:

Writing is the process by which you realize that you do not understand what you are talking about. Importantly, writing is also the process by which you figure it out.

Perhaps we can apply the same concept to LLMs. Making an AI generate all the code I write could be a dangerous pattern…but asking AI to explain concepts might be a healthier relationship.

Outro

What does this all mean? I’m not really sure. Right now, I’m just having fun generating silly images. Is that art? I dunno. It sure is fun though.